Practical GenAI LLM Prompt Engineering: The latest practices for everyone

In Part 1 of this series, The Evolution of GenAI LLM Prompt Engineering: From Zero-Shot to Chain of Thought, and as part of the Viewyonder project, Find your GenAI happy path, we took a journey together by discussing the evolution of prompt engineering over the past couple of years.

Here we are. After Part 1, slightly out of breath, but stood together in a clearing. In Part 2, we’ll walk through an example using the “latest” prompt engineering techniques to tackle a common business process (communication of ideas, otherwise known as content creation). We’ll also provide other examples that include consultancy, app development, and a business loan application.

- Prompt engineering for an article on prompt engineering

- The human: weakest link in prompt engineering?

- 5 essential ingredients for a tasty prompt

- Prompt chaining: Get the LLM to create your prompt for you

- What happens next?

- 5 useful applications of prompt engineering

- Use case 1: Business loan application

- Use case 2: Develop a mobile app MVP.

- Use case 3: Help with WordPress administration and information architecture

- Use case 4: Repeating previous work for a new client

- Use case 5: Learning a new topic

- How could this article be improved?

- Useful prompt engineering resources

Prompt engineering for an article on prompt engineering

It’s a bit ouroboros, but it kills two birds with one stone because you are experiencing the result while learning about how it was created. Nifty, eh?

So, we need to write an article on the practical applications of prompt engineering for people who are relatively new to prompt engineering. They might hate the phrase prompt engineering. They might be at different stages of the evolution of prompt engineering:

- Could be still firing off zero-shot prompts and being grumpy with the results, thinking GenAI is crap….

- …all the way to…

- Putting detailed prompts into GenAI with examples.

But before we start on GenAI, we need to begin with the human: who are you?

The human: weakest link in prompt engineering?

Are you a novice or a subject matter expert in the task at hand? It matters.

If you’re a novice then how will you know what to ask the LLM to do? What examples will you have? Your input to the LLM is likely to be weak. In this case, you need to start by collaborating with the LLM to learn something about the task in hand. You need to do YOUR research (with the LLM to help). Remember that LLMs hallucinate (it’s how they work, it’s not a fault). And you are both the guide and the ultimate arbiter of truth and quality.

If you’re a subject matter expert, then I have great news for you. With practice, your prompts will be excellent and you will get 10x the value out of an LLM compared to a novice.

Which brings us to our first truth about the human’s impact on the LLM and prompt engineering results:

The more expert the human is in the subject, the better they are at prompt engineering and at understanding how an LLM works, and the better they understand the final goal, the audience, and what quality is, the better the results: 10-100x those of a naive, novice user.

But wherever you’re starting from, as long as you’re aware of it, there’s always a path forward to get better.

5 essential ingredients for a tasty prompt

The more you can “see” the end result you’re after, the better, but don’t worry too much about this because this is a bit of a journey and even if you have an idea of the end result, the journey is likely to change that — who hasn’t felt different after a walk by the coast? 🙂

Here’s a list of things for you to put together – you can work with an LLM to build this, but sketching it down is a good start.

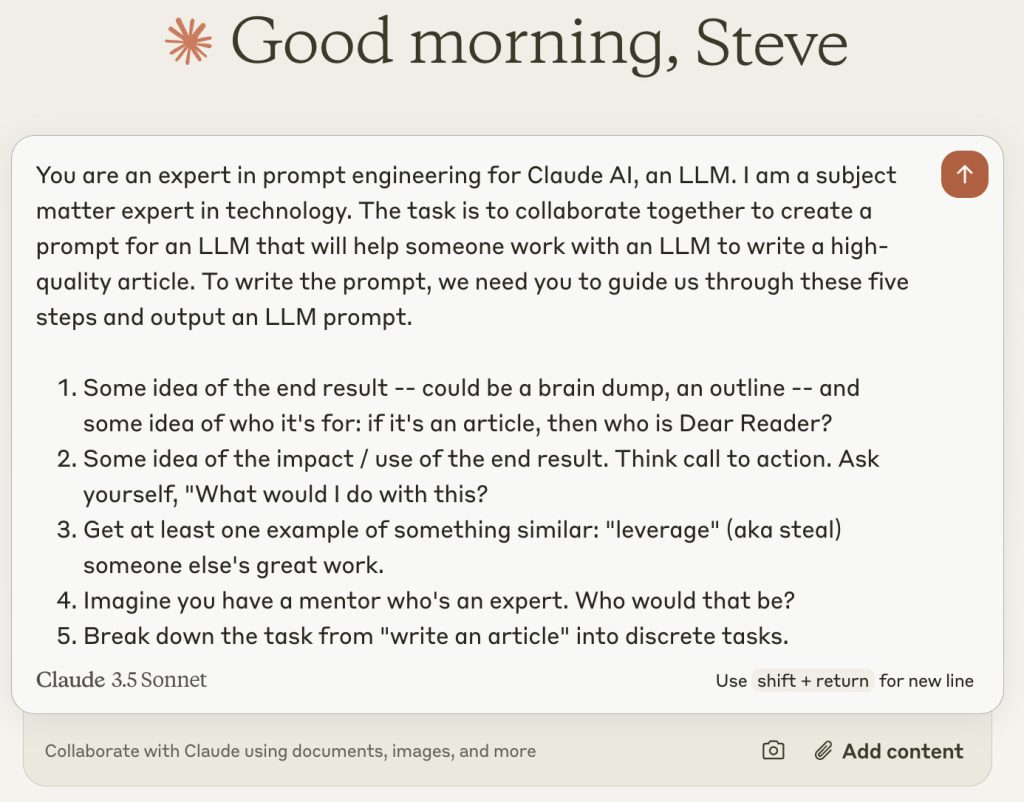

- Some idea of the end result — could be a brain dump, an outline — and some idea of who it’s for: if it’s an article, then who is Dear Reader?

- Some idea of the impact / use of the end result. Think call to action. Ask yourself, “What would I do with this?”

- Get at least one example of something similar: “leverage” (aka steal) someone else’s great work.

- Imagine you have a mentor who’s an expert. Who would that be?

- Break down the task from “write an article” into discrete tasks.
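One way to keep these ingredients together is a small template you fill in before you open the LLM chat. The sketch below is only an illustration: the `PromptBrief` class, its field names, and the layout of the rendered text are assumptions made for this example, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class PromptBrief:
    """The ingredients from the list above (all names here are hypothetical)."""
    end_result: str   # brain dump or outline of what you want
    audience: str     # who is Dear Reader?
    impact: str       # call to action: what would the reader do with this?
    example: str      # at least one similar piece to learn from
    mentor: str       # the expert you would like guiding you
    tasks: list[str] = field(default_factory=list)  # the job broken into steps

    def render(self) -> str:
        """Turn the brief into plain text you can paste into an LLM chat."""
        steps = "\n".join(f"{n}. {task}" for n, task in enumerate(self.tasks, 1))
        return (
            f"End result: {self.end_result}\n"
            f"Audience: {self.audience}\n"
            f"Impact / call to action: {self.impact}\n"
            f"Example to learn from: {self.example}\n"
            f"Mentor to emulate: {self.mentor}\n"
            f"Tasks:\n{steps}"
        )


# Placeholder values, loosely based on this article.
brief = PromptBrief(
    end_result="A practical guide to prompt engineering for article writing",
    audience="Technology leaders and practitioners, not necessarily technical",
    impact="Readers apply prompt engineering in their daily work",
    example="A 'be as specific as possible' section from a best-practices post",
    mentor="The author of a book on prompt engineering",
    tasks=["Research the topic", "Develop an outline", "Write a draft", "Edit"],
)
print(brief.render())
```

Filling in every field before you start is the point: an empty field tells you which ingredient you still need to think about.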

Prompt chaining: Get the LLM to create your prompt for you

Sneaky, eh? If you’re new to prompt engineering, this might feel like cheating. You start by getting the LLM to help you write the prompt that it’s going to use. This is called “prompt chaining”: you don’t rely on a single prompt, but get the LLM to iterate through a series of prompts to reach the final answer.
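In code, a chain is just a loop in which each response is fed into the next prompt. The sketch below assumes an LLM can be treated as a plain "text in, text out" function; the `chain` helper, the `{previous}` placeholder, and the `fake_llm` stub are all invented for this illustration, so swap in whichever real client you use.

```python
from typing import Callable

# An LLM modelled as "text in, text out". This signature is an assumption
# of the sketch, not any vendor's API.
LLM = Callable[[str], str]


def chain(llm: LLM, first_prompt: str, follow_ups: list[str]) -> list[str]:
    """Run prompts in sequence, passing each response into the next prompt.

    Each follow-up is a template containing "{previous}", which is replaced
    by the response from the step before it.
    """
    responses = [llm(first_prompt)]
    for template in follow_ups:
        next_prompt = template.replace("{previous}", responses[-1])
        responses.append(llm(next_prompt))
    return responses


def fake_llm(prompt: str) -> str:
    """Offline stand-in that only labels what it received."""
    return f"<response to: {prompt[:40]}>"


steps = chain(
    fake_llm,
    "Help me write a prompt for an article on prompt engineering.",
    ["Use this prompt to write the article:\n{previous}"],
)
for number, text in enumerate(steps, 1):
    print(number, text)
```

With a real model in place of `fake_llm`, the first response would be the generated prompt and the second would be the draft article.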

I’m using Anthropic’s Claude. I find it the best for my work (writing, planning, coding).

Our prompt:

Claude’s response:

Certainly, I'd be happy to guide you through the process of creating a prompt for an LLM to help someone write a high-quality article. Let's go through the five steps you've outlined:

End result and audience:

Let's brainstorm on this. What kind of article are we aiming for? Is it a technical piece, a how-to guide, or perhaps an opinion piece on a current tech trend? And who is our target audience - tech enthusiasts, industry professionals, or the general public?

Impact and call to action:

What do we want readers to do after reading this article? Learn a new skill, change their perspective on a tech issue, or perhaps make a decision about adopting a new technology?

Similar examples:

Can you think of any articles in the tech space that you consider high-quality and would like to emulate? It could be from reputable tech publications, blogs, or even academic journals.

Expert mentor:

In the technology field, who would you consider an ideal mentor for writing such an article? It could be a renowned tech journalist, a respected industry leader, or an academic in a relevant field.

Breaking down the task:

Here's an initial breakdown of the task of writing an article:

a. Research the topic thoroughly

b. Develop a detailed outline

c. Write an engaging introduction

d. Expand on main points with supporting evidence

e. Craft a compelling conclusion

f. Edit and refine the content

g. Add relevant examples, case studies, or data visualizations

h. Proofread for grammar and clarity

Could you provide your thoughts on these points? Once we have your input, we can craft a comprehensive prompt for the LLM.

My responses:

End result and audience: The audience is leaders or practitioners in the technology industry, but not necessarily technical people. It’s a guide on how to use prompt engineering with an LLM to create an article, followed by other examples.

Impact and call to action: We want readers to use this article to apply prompt engineering in their daily work. If they are novices, we want them to have confidence and clarity to get more out of LLMs. If they are experts, then they can see how to use LLMs to speed up their work and productivity.

Similar examples: I will use just one small selection of the content in this blog post: 1. Be specific as possible. https://www.digitalocean.com/resources/articles/prompt-engineering-best-practices

Expert mentor: One of the experts in the field is Mike Taylor, who wrote the book “Prompt Engineering for Generative AI”.

And already we have a prompt to put into (chain into) the next LLM:

# Prompt: Create a Comprehensive Guide on Using Prompt Engineering with LLMs for Article Creation

## Context and Audience

You are an expert in prompt engineering for Large Language Models (LLMs). Your task is to create a comprehensive guide on how to use prompt engineering with LLMs to write high-quality articles. The target audience is leaders and practitioners in the technology industry who may not necessarily have technical backgrounds.

## Article Objectives

1. Provide a clear, step-by-step process for using prompt engineering to create articles with LLMs.

2. Offer practical tips and best practices for effective prompt engineering.

3. Include examples of well-crafted prompts and their resulting outputs.

4. Address common challenges and how to overcome them.

5. Discuss the potential impact of this approach on productivity and content quality.

## Article Structure

1. Introduction

- Define prompt engineering and its relevance to article creation

- Briefly explain the benefits of using LLMs for content generation

2. Understanding the Basics of Prompt Engineering

- Key principles of effective prompts

- Common pitfalls to avoid

3. Step-by-Step Guide to Crafting Prompts for Article Creation

- Defining the article's purpose and audience

- Outlining the article structure

- Creating specific prompts for each section

- Iterating and refining prompts

4. Best Practices for Prompt Engineering in Article Writing

- Being as specific as possible (expand on the example from DigitalOcean)

- Providing context and examples

- Specifying tone, style, and format

- Balancing guidance and creative freedom for the LLM

5. Case Studies and Examples

- Showcase 2-3 examples of prompts used to generate different types of articles

- Analyze the prompts and resulting outputs

6. Overcoming Common Challenges

- Dealing with inconsistencies or inaccuracies

- Managing article length and depth

- Maintaining a consistent voice throughout the article

7. Maximizing Productivity with Prompt Engineering

- Strategies for efficient prompt creation and iteration

- Integrating LLM-generated content into your workflow

8. Ethical Considerations and Best Practices

- Ensuring originality and avoiding plagiarism

- Fact-checking and verifying LLM-generated content

- Disclosure of AI assistance in content creation

9. Conclusion

- Recap of key takeaways

- Encouragement for readers to experiment with prompt engineering

## Style and Tone

- Use a professional yet approachable tone, suitable for technology industry leaders and practitioners.

- Balance technical information with practical, actionable advice.

- Include relevant examples and analogies to illustrate complex concepts.

## Additional Requirements

- Incorporate insights from Mike Taylor's book "Prompt Engineering for Generative AI" where relevant.

- Include at least one visual element, such as a flowchart or diagram, to illustrate the prompt engineering process for article creation.

- Provide a "Quick Start Guide" or summary section for readers who want to implement the techniques immediately.

- Suggest 2-3 exercises or challenges for readers to practice their prompt engineering skills.

Remember to emphasize how this guide will help both novices gain confidence in using LLMs and experts increase their productivity. The article should empower readers to apply prompt engineering techniques in their daily work, particularly for content creation tasks.

At this point I would normally tune this prompt before using it, but for this illustration I’ll just go with it. What tunings?

- Always start paragraphs with the active voice. Only use passive voice occasionally and for effect.

- Write the article like it’s a conversation. Occasionally ask the reader some questions on key points.

- Avoid words like delve, tapestry….(provide list)

- ….build up your library of personal LLM tunings.
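A personal tunings library can start as a short list of reusable instructions that you append to any prompt. The sketch below is a minimal version of that idea: `TUNINGS`, `WORDS_TO_AVOID`, `apply_tunings`, and `find_banned_words` are hypothetical names, and the word list holds only the two words mentioned above.

```python
# Reusable style instructions, taken from the tunings listed above.
TUNINGS = [
    "Always start paragraphs with the active voice. Only use passive voice "
    "occasionally and for effect.",
    "Write the article like it's a conversation. Occasionally ask the reader "
    "some questions on key points.",
]

# Only the two words named in the article; extend this with your own list.
WORDS_TO_AVOID = ["delve", "tapestry"]


def apply_tunings(prompt: str) -> str:
    """Append the personal tunings and the banned-word rule to a prompt."""
    lines = [prompt.rstrip(), "", "## Personal tunings"]
    lines += [f"- {tuning}" for tuning in TUNINGS]
    lines.append(f"- Avoid these words: {', '.join(WORDS_TO_AVOID)}.")
    return "\n".join(lines)


def find_banned_words(text: str) -> list[str]:
    """Return the banned words that still appear in an LLM's output."""
    lowered = text.lower()
    return [word for word in WORDS_TO_AVOID if word in lowered]


print(apply_tunings("# Prompt: Create a guide on prompt engineering"))
print(find_banned_words("Let's delve into the rich tapestry of prompts."))
```

The second helper is a cheap check to run on a draft: if a banned word slipped through, you know the tuning needs to be stronger or the draft needs an edit.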

We can keep this going in the same Claude session or start a new one. If you keep using the same session, you use up tokens faster because the whole session so far is sent back to the LLM each time (that’s how the LLM “remembers”). So I’m going to start a new Claude session and put this prompt in.
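Some rough arithmetic shows why a long session burns through tokens. The sketch below assumes every turn (your prompt plus the reply) adds about the same number of tokens and that each new request resends every turn so far; the figure of 500 tokens per turn is invented for illustration, and real counts depend on the model's tokenizer and on how your provider bills.

```python
def tokens_sent_in_one_session(turns: int, tokens_per_turn: int) -> int:
    """Total input tokens when every request resends the whole history.

    Request 1 sends 1 turn, request 2 sends 2 turns, and so on, so the
    total is tokens_per_turn * (1 + 2 + ... + turns).
    """
    return tokens_per_turn * turns * (turns + 1) // 2


def tokens_sent_in_fresh_sessions(turns: int, tokens_per_turn: int) -> int:
    """Total input tokens when each request starts a new, empty session."""
    return tokens_per_turn * turns


# Hypothetical numbers, chosen only to show the shape of the growth.
for turns in (1, 5, 10, 20):
    same = tokens_sent_in_one_session(turns, tokens_per_turn=500)
    fresh = tokens_sent_in_fresh_sessions(turns, tokens_per_turn=500)
    print(f"{turns:>2} turns: same session {same:>7,} tokens, fresh {fresh:>6,}")
```

Under these assumptions the cost of a single session grows with the square of the number of turns, which is why starting a new session with a finished prompt is often the cheaper choice.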

Note the classic LLM tell in the introduction 😂 This is why using LLMs is part, not all, of your job. If you use this stuff as-is, then quite frankly you’re lazy and an idiot. You’re supposed to take this and make it yours. That’s the human bit: you top and tail this process, and the LLM just speeds up the bits in the middle == more productivity and higher quality.

# Comprehensive Guide: Using Prompt Engineering with LLMs for Article Creation

## Introduction

In today's fast-paced digital landscape, content creation is more crucial than ever. However, producing high-quality articles consistently can be time-consuming and challenging. Enter prompt engineering for Large Language Models (LLMs) – a game-changing approach that's revolutionizing how we create content.

Prompt engineering is the art and science of crafting effective instructions or queries for LLMs to generate desired outputs. When applied to article creation, it allows you to harness the power of AI to produce well-structured, informative, and engaging content efficiently.

By leveraging LLMs for content generation, you can:

- Dramatically reduce the time spent on initial drafts

- Overcome writer's block and generate fresh ideas

- Maintain consistency across multiple pieces of content

- Scale your content production without sacrificing quality

This guide will walk you through the process of using prompt engineering with LLMs to create high-quality articles, providing you with the tools and knowledge to enhance your content creation workflow.

## Understanding the Basics of Prompt Engineering

Before diving into the specifics of article creation, it's essential to grasp the fundamental principles of effective prompt engineering.

### Key Principles of Effective Prompts

1. **Clarity**: Be specific and unambiguous in your instructions.

2. **Context**: Provide relevant background information to guide the LLM's understanding.

3. **Structure**: Organize your prompt logically, often mirroring the desired output structure.

4. **Constraints**: Set clear boundaries for the LLM's response, such as word count or style.

5. **Examples**: When appropriate, include sample outputs to illustrate your expectations.

### Common Pitfalls to Avoid

1. **Vagueness**: Avoid broad or unclear instructions that can lead to unfocused outputs.

2. **Overcomplication**: Don't overwhelm the LLM with unnecessary details or conflicting requirements.

3. **Assuming knowledge**: Don't expect the LLM to have specific contextual information unless provided.

4. **Ignoring tone**: Failing to specify the desired tone can result in inconsistent writing styles.

5. **Neglecting iteration**: Treat prompt engineering as an iterative process, refining based on results.

## Step-by-Step Guide to Crafting Prompts for Article Creation

### 1. Define the Article's Purpose and Audience

Start by clearly articulating:

- The main objective of the article

- The target audience and their level of expertise

- Key takeaways or desired reader actions

Example prompt component:

```

Create an article for technology professionals with 5+ years of experience, explaining the benefits of edge computing in IoT applications. The article should motivate readers to consider implementing edge computing in their projects.

```

### 2. Outline the Article Structure

Provide a clear structure for the LLM to follow:

- Specify the main sections of the article

- Indicate any subsections or key points to be covered

- Define the desired length for each section

Example prompt component:

```

Structure the article as follows:

1. Introduction (100 words)

2. What is Edge Computing? (150 words)

3. Benefits of Edge Computing in IoT (300 words)

- Reduced latency

- Improved security

- Bandwidth conservation

4. Real-world Examples (200 words)

5. Implementation Challenges and Solutions (250 words)

6. Conclusion (100 words)

```

### 3. Create Specific Prompts for Each Section

Develop detailed prompts for individual sections, incorporating:

- Specific questions or topics to be addressed

- Any required data, statistics, or references

- Desired tone and writing style

Example prompt for a section:

```

For the "Benefits of Edge Computing in IoT" section:

- Explain how edge computing reduces latency in IoT applications. Provide a specific example, such as in autonomous vehicles or industrial automation.

- Discuss the security advantages of processing data at the edge. Mention encryption and reduced data transmission.

- Describe how edge computing conserves bandwidth. Include a brief explanation of how this can lead to cost savings.

Use a professional tone with technical details, but ensure the explanations are accessible to a general technology audience.

```

### 4. Iterate and Refine Prompts

After generating initial content:

- Review the output for accuracy, relevance, and style

- Identify areas that need improvement or expansion

- Adjust your prompts to address any shortcomings

Example refinement prompt:

```

The section on implementation challenges lacks specific examples. Please revise this section to include at least two real-world examples of challenges faced by companies implementing edge computing in IoT, and describe how they overcame these challenges.

```

## Best Practices for Prompt Engineering in Article Writing

1. **Be as Specific as Possible**

- Use precise language and avoid ambiguity

- Specify exact requirements for formatting, tone, and content

Example: Instead of "Write about cloud computing," use "Explain the top 5 benefits of cloud computing for small businesses, including cost savings and scalability. Provide a real-world example for each benefit."

2. **Provide Context and Examples**

- Offer background information relevant to the topic

- Include sample phrases or paragraphs to guide style and tone

3. **Specify Tone, Style, and Format**

- Clearly state the desired writing style (e.g., formal, conversational, technical)

- Indicate any formatting requirements (e.g., use of bullet points, headings)

4. **Balance Guidance and Creative Freedom**

- Provide enough direction to ensure relevant content

- Allow room for the LLM to generate creative ideas and connections

5. **Use Chain-of-Thought Prompting**

- Break down complex tasks into smaller, logical steps

- Guide the LLM through a reasoning process

Example: "To explain machine learning to a non-technical audience:

1. Start with a simple, relatable analogy

2. Break down the core concepts of data, algorithms, and prediction

3. Provide a step-by-step example of how a machine learning model might work

4. Discuss real-world applications

5. Address common misconceptions"

6. **Leverage Role-Playing**

- Assign a specific role or expertise to the LLM

- Frame the task from the perspective of that role

Example: "As a seasoned cybersecurity expert with 15 years of experience, write an article explaining the importance of multi-factor authentication to C-level executives."

## Case Studies and Examples

### Case Study 1: Technical Blog Post

Prompt:

```

Write a technical blog post for software developers about the benefits of containerization in microservices architecture. The article should be 800-1000 words long and include the following sections:

1. Introduction: Define containerization and microservices

2. Benefits of Containerization in Microservices:

- Isolation and consistency

- Scalability and resource efficiency

- Faster deployment and updates

3. Popular Containerization Tools: Brief overview of Docker and Kubernetes

4. Best Practices for Implementing Containerization in Microservices

5. Potential Challenges and Solutions

6. Conclusion: Future of containerization in microservices

Use a professional tone with technical details, but ensure explanations are clear for junior to mid-level developers. Include at least one code snippet or configuration example.

```

Analysis: This prompt provides a clear structure, specifies the target audience, and outlines key points to be covered. It also sets expectations for tone, length, and the inclusion of technical elements like code snippets.

### Case Study 2: Thought Leadership Article

Prompt:

```

Create a thought leadership article on the future of artificial intelligence in healthcare. The article should be written from the perspective of a visionary tech CEO and should be suitable for publication in a leading business technology magazine. Follow these guidelines:

1. Length: 1200-1500 words

2. Tone: Visionary, authoritative, yet accessible to non-technical C-suite executives

3. Structure:

- Introduction: Hook readers with a compelling vision of AI-driven healthcare

- Current State: Brief overview of AI applications in healthcare today

- Future Predictions: Focus on 3-4 major trends or breakthroughs expected in the next 5-10 years

- Challenges: Address ethical concerns and implementation hurdles

- Call to Action: Inspire healthcare and tech leaders to collaborate and innovate

Include at least two hypothetical scenarios or case studies illustrating the potential impact of AI in healthcare. Use analogies or metaphors to explain complex concepts. Conclude with a powerful statement about the transformative potential of AI in improving global health outcomes.

```

Analysis: This prompt sets a specific context (thought leadership), target audience (C-suite executives), and publication type (business technology magazine). It provides a clear structure and guidelines for tone and content, encouraging the use of storytelling techniques like scenarios and analogies to engage readers.

## Overcoming Common Challenges

1. **Dealing with Inconsistencies or Inaccuracies**

- Solution: Use fact-checking prompts to verify key information

- Example: "Review the article for factual accuracy, focusing on statistics and technical claims. List any statements that require verification, and suggest reliable sources for fact-checking."

2. **Managing Article Length and Depth**

- Solution: Provide specific word count guidelines and use prompts to expand or summarize content

- Example: "The current draft is 2000 words, but we need it to be 1500 words. Please revise the article, focusing on the most crucial information and removing any redundant content while maintaining the key messages."

3. **Maintaining a Consistent Voice Throughout the Article**

- Solution: Define the voice clearly in your initial prompt and use consistency-check prompts

- Example: "Review the article for consistency in tone and voice. It should maintain a professional yet approachable style throughout. Highlight any sections that deviate from this voice and suggest revisions."

## Maximizing Productivity with Prompt Engineering

1. **Create a Prompt Template Library**

- Develop a collection of effective prompts for different article types and sections

- Regularly update and refine your templates based on results

2. **Use Incremental Prompting**

- Break down the article creation process into smaller tasks

- Use a series of prompts to gradually build and refine your article

3. **Implement a Review and Refinement Workflow**

- Generate initial content with broad prompts

- Use specific follow-up prompts to enhance, fact-check, and polish the content

4. **Combine Human Expertise with LLM Capabilities**

- Use LLMs for initial drafts and idea generation

- Apply human expertise for high-level editing, fact-checking, and adding unique insights

## Ethical Considerations and Best Practices

1. **Ensuring Originality and Avoiding Plagiarism**

- Use plagiarism-check prompts to identify potential issues

- Example: "Please check this article for any content that may be too similar to existing publications. Highlight any sections that should be rewritten for originality."

2. **Fact-Checking and Verifying LLM-Generated Content**

- Implement a systematic fact-checking process

- Use prompts to generate citations and source recommendations

3. **Disclosure of AI Assistance in Content Creation**

- Be transparent about the use of AI in your content creation process

- Consider adding a disclaimer or explanation of your AI-assisted workflow

## Quick Start Guide

1. Define your article's purpose, audience, and key messages

2. Create a detailed outline with specific sections and word counts

3. Develop prompts for each section, including context and examples

4. Generate initial content using your prompts

5. Review and refine the content using iterative prompts

6. Fact-check and edit the final draft

7. Add human insights and polish the article

## Exercises to Practice Prompt Engineering Skills

1. **Headline Generator**

Task: Create a prompt that generates 5 engaging headlines for an article about sustainable technology innovations.

2. **Section Expander**

Task: Write a prompt that takes a brief paragraph about a topic and expands it into a fully-developed section with examples and data points.

3. **Style Transformer**

Task: Develop a prompt that takes an existing technical article and transforms it into a more accessible version for a general audience, maintaining the key information.

## Conclusion

Prompt engineering for article creation with LLMs is a powerful skill that can significantly enhance your content production workflow. By mastering the art of crafting effective prompts, you can harness the capabilities of AI to generate high-quality, engaging articles efficiently.

Remember that prompt engineering is an iterative process. Don't be afraid to experiment, refine your techniques, and adapt your approach based on the results you achieve. With practice, you'll develop an intuitive sense for creating prompts that consistently yield excellent content.

As you integrate this approach into your workflow, you'll likely find that it not only boosts your productivity but also stimulates your creativity, allowing you to explore ideas and perspectives you might not have considered otherwise.

The future of content creation lies at the intersection of human expertise and AI capabilities. By embracing prompt engineering, you're positioning yourself at the forefront of this exciting frontier. So, start experimenting with these techniques today, and unlock new levels of efficiency and creativity in your article writing process.

What happens next?

Guess what I do next? I read through this prompt carefully. Then I go for a break. A brew. A walk. Another task. Because the human brain takes time to absorb and process this. Then, when I get back, or maybe even later/tomorrow, I start working on the content. Usually, my final result is nothing like what the LLM came up with, but I wouldn’t have got to that final result, in the time I did, without the LLM.

12 things I do to improve the LLM output

- I tune the prompt.

- I work on the piece. I either get the LLM to write a draft, or I do an interactive semi-chain of thought / task break down approach. Depends on time, topic, etc.

- I have a break. My brain treats new things like a piece of brisket: cook it slow for best results.

- I work on the piece again. Don’t be afraid of changing a lot.

- I ask the LLM for feedback, ask it to reverse engineer the article — does it match the original prompt? What’s different? Plus other questions I think Dear Reader might have.

- I make the edits and see the finish line and the roaring crowd.

- I read the copy out loud, and sometimes I make an audio recording. I edit so it sounds natural.

- For long articles (this one started out as 4,456 words, a 24-minute read), I will have the LLM write a TLDR and I’ll edit that.

- Consider breaking the article down into smaller articles in a series. I can always offer the single long-read, and a separate 5-part series.

- Find external related articles. You can use an internet-connected LLM to go find related resources if you paste your article in.

- I get someone I trust to have a read.

- I post it, making sure the on-page SEO and interlinking is correct.

  - Get it to write a <55 word excerpt.

  - Suggest meta description.

  - Get ideas for a featured image.

- I get the LLM to atomize the article into social posts I can use.

- I post on social linking to the article, and using UTM codes to track.
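Tagging a link for tracking means adding the `utm_source`, `utm_medium`, and `utm_campaign` query parameters to the URL. The helper below is a small sketch of one way to do that with Python's standard library; the function name `add_utm`, the `example.com` address, and the campaign values are placeholders.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def add_utm(url: str, source: str, medium: str, campaign: str) -> str:
    """Return the URL with utm_source, utm_medium and utm_campaign added.

    Existing query parameters are kept; existing utm_* values are replaced.
    """
    parts = urlsplit(url)
    params = dict(parse_qsl(parts.query, keep_blank_values=True))
    params.update(
        utm_source=source,
        utm_medium=medium,
        utm_campaign=campaign,
    )
    return urlunsplit(parts._replace(query=urlencode(params)))


# example.com and the campaign name are placeholders.
print(add_utm("https://example.com/article?ref=home", "linkedin", "social", "prompt-guide"))
```

Using one helper for every social post keeps the parameter spelling consistent, which matters because analytics tools treat `LinkedIn` and `linkedin` as different sources.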

I save the prompt into my library. Evolving your own prompts and examples is one of the differences between a prompt engineering pro and everyone else.
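A prompt library does not need special tooling; a single JSON file is enough to begin with. The sketch below assumes that layout: the file name `prompt_library.json`, the `save_prompt` and `load_prompt` helpers, and the `notes` field are all illustrative choices, not a description of any particular tool.

```python
import json
import tempfile
from datetime import date
from pathlib import Path


def save_prompt(library: Path, name: str, prompt: str, notes: str = "") -> None:
    """Add or overwrite a named prompt in a JSON library file."""
    entries = {}
    if library.exists():
        entries = json.loads(library.read_text(encoding="utf-8"))
    entries[name] = {
        "prompt": prompt,
        "notes": notes,  # what worked, what you tuned, which model you used
        "saved": date.today().isoformat(),
    }
    library.write_text(json.dumps(entries, indent=2), encoding="utf-8")


def load_prompt(library: Path, name: str) -> str:
    """Fetch a saved prompt by name."""
    entries = json.loads(library.read_text(encoding="utf-8"))
    return entries[name]["prompt"]


# A temporary folder keeps the demo from touching your real files.
with tempfile.TemporaryDirectory() as folder:
    library = Path(folder) / "prompt_library.json"
    save_prompt(library, "article-guide", "# Prompt: Create a Comprehensive Guide", "v1, untuned")
    print(load_prompt(library, "article-guide"))
```

The notes field is where the evolution happens: recording what you changed and why makes the next version of the prompt easier to write.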

5 useful applications of prompt engineering

I chose the article writing example because it’s what you’re reading, but there are more business applications of prompt engineering. Here are some I use regularly:

Use case 1: Business loan application

Use case 2: Develop a mobile app MVP.

Coding co-pilots are all the rage, and while you might not get a production app out of a prompt engineering session, you can certainly get a prototype/MVP to test an idea fast with an audience. It also helps the app owner to get the ideas out of their head. This is a super use case.

Use case 3: Help with WordPress administration and information architecture

It’s amazing what LLMs know about WordPress. From helping me with Advanced CSS, to creating information architecture with Advanced Custom Fields — and if it doesn’t know (never happened to me), then I can paste in some documentation and it will help. Always test the results!

Use case 4: Repeating previous work for a new client

This is a classic productivity hack. If I repeat some form of work for a new client, then I will take my internal guide and anonymized previous example along with my custom prompt, then put in the new client’s requirements… and the LLM churns out v1 in no time. I’m on the right path and can do a better job faster than ever before.
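The pieces described above can be stitched together mechanically. The sketch below shows one possible assembly: the helper names, the `[CLIENT]` placeholder, the section headings, and the sample client "Acme" are all made up for illustration. Simple name replacement will not catch indirect identifiers such as addresses or project names, so still read the example yourself before pasting it anywhere.

```python
import re


def anonymize(text: str, client_names: list[str], placeholder: str = "[CLIENT]") -> str:
    """Replace each client name with a placeholder, ignoring case."""
    for name in client_names:
        text = re.sub(re.escape(name), placeholder, text, flags=re.IGNORECASE)
    return text


def build_repeat_work_prompt(
    custom_prompt: str,
    guide: str,
    previous_example: str,
    old_client_names: list[str],
    new_requirements: str,
) -> str:
    """Combine prompt, internal guide, anonymized example and new requirements."""
    sections = [
        custom_prompt,
        "## Internal guide\n" + guide,
        "## Previous example (anonymized)\n"
        + anonymize(previous_example, old_client_names),
        "## New client requirements\n" + new_requirements,
    ]
    return "\n\n".join(sections)


# All values below are invented sample data.
prompt = build_repeat_work_prompt(
    custom_prompt="Draft a site audit in the style of the previous example.",
    guide="Audits cover structure, content and performance.",
    previous_example="Acme Ltd wanted a 3-page site audit. ACME approved it.",
    old_client_names=["Acme Ltd", "Acme"],  # longest name first
    new_requirements="A 5-page audit for a charity website.",
)
print(prompt)
```

Listing the longest form of a name first avoids leaving fragments such as "Ltd" behind after the shorter form has been replaced.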

Use case 5: Learning a new topic

Always watching YouTube videos and reading docs? What if you let the LLM be your tutor? It’s amazing what these things know internally, but you can always supplement it with information you’ve found. Then prompt the LLM to teach you, breaking down the goal into lessons. Ask it to create quizzes for you. Examples. Homework it can mark!

How could this article be improved?

I pasted this article back into Claude and asked it a simple question: Can you give me feedback?

In return I got some suggestions on strengths and improvements. I haven’t applied this advice yet — I will do in a future version, which will also probably be split into a multi-part series.

Strengths

- Comprehensive coverage: The article covers a wide range of topics related to prompt engineering, from basic principles to advanced techniques and ethical considerations.

- Practical examples: The inclusion of case studies and specific prompt examples helps readers understand how to apply the concepts in real-world scenarios.

- Step-by-step guide: The article provides a clear, structured approach to crafting prompts for article creation, making it easy for readers to follow and implement.

- Addressing challenges: The section on overcoming common challenges is particularly useful for readers who may encounter difficulties in their prompt engineering journey.

- Ethical considerations: The article responsibly addresses ethical concerns and best practices, including transparency about AI usage.

- Quick start guide and exercises: These sections provide practical ways for readers to immediately apply what they’ve learned.

Improvements

Areas for potential improvement:

- Length: At 4,456 words, the article is quite long. Consider breaking it into a series of shorter, more focused articles to improve readability.

- Visual elements: The article could benefit from diagrams, flowcharts, or other visual aids to illustrate key concepts and processes.

- More diverse examples: While the case studies are helpful, including examples from different industries or use cases could broaden the article’s appeal.

- Technical depth: Depending on the target audience, you might consider adding more technical details about how LLMs process prompts or the underlying mechanisms of prompt engineering.

- Comparative analysis: A brief comparison of prompt engineering techniques across different LLM platforms could be valuable for readers.

- Updates on recent developments: Given how rapidly the field is evolving, including information on the latest trends or techniques in prompt engineering could enhance the article’s timeliness.

- User testimonials or expert opinions: Including quotes or insights from industry professionals who use prompt engineering could add credibility and real-world perspective.

Useful prompt engineering resources

- Prompt Engineering Guide at promptengineering.ai

- Prompt Engineering Best Practices: Tips, Tricks, and Tools by DigitalOcean

Did you find this guide helpful? Do you have your own prompt engineering tips or tricks? We’d love to hear from you! Drop a comment below or reach out via our contact form.

Contribute to Exciting Projects: Are you passionate about pushing the boundaries of AI and language models? We’re always looking for talented individuals to join our projects:

- Paid Opportunities: We have several funded research initiatives exploring advanced prompt engineering techniques.

- Owned Collaborations: We do short, unpaid projects for the benefit of the people doing the work, to get some content or a prototype out.
